Meta, the company that owns Facebook and Instagram, said Tuesday it will stop using its paid fact-checkers to edit user content and move to a “community notes” model similar to X/Twitter, where users can add their own notes and corrections to posts.

Facebook, Threads, and Instagram have a reputation among conservative users for harsh treatment of their social media comments by Meta’s contracted fact-checkers. The company’s practices have, for instance, led some users, such as Must Read Alaska, to post political stories to Meta-owned sites less frequently because of the company’s censorship, shadow-banning, and “Facebook Jail” practices.

Must Read Alaska has found that Facebook censorship is real, and it is applied to conservative voices. Since 2021, Must Read content has been throttled back by Meta and user engagement has only occurred because people seek out the Must Read Alaska page.

In fact, stories like this one are among the types we chose not to put on the Facebook platform because of the likelihood they would be removed or hidden, or would get a “strike” that could put MRAK in “Facebook Jail.”

“We’re replacing fact checkers with Community Notes, simplifying our policies and focusing on reducing mistakes,” said Meta majority owner and CEO Mark Zuckerberg. “Looking forward to this next chapter.”

Starting in the U.S., the company is ending its third-party fact-checking program. The company said it will “allow more speech by lifting restrictions on some topics that are part of mainstream discourse and focusing our enforcement on illegal and high-severity violations.”

In another change, the company “will take a more personalized approach to political content, so that people who want to see more of it in their feeds can.”

Zuckerberg said, “In recent years we’ve developed increasingly complex systems to manage content across our platforms, partly in response to societal and political pressure to moderate content. This approach has gone too far. As well-intentioned as many of these efforts have been, they have expanded over time to the point where we are making too many mistakes, frustrating our users and too often getting in the way of the free expression we set out to enable. Too much harmless content gets censored, too many people find themselves wrongly locked up in ‘Facebook jail,’ and we are often too slow to respond when they do.”

Zuckerberg said he wants to return to “that fundamental commitment to free expression.”

When Facebook launched the independent fact-checking program in 2016, it said it did not want to be the arbiter of truth. The company thought it was a reasonable choice to hand fact-checking over to third parties to tamp down the misinformation and hoaxes that circulate online.

“That’s not the way things played out, especially in the United States. Experts, like everyone else, have their own biases and perspectives. This showed up in the choices some made about what to fact check and how. Over time we ended up with too much content being fact checked that people would understand to be legitimate political speech and debate. Our system then attached real consequences in the form of intrusive labels and reduced distribution. A program intended to inform too often became a tool to censor,” Zuckerberg said in a statement.

“We are now changing this approach. We will end the current third party fact checking program in the United States and instead begin moving to a Community Notes program. We’ve seen this approach work on X – where they empower their community to decide when posts are potentially misleading and need more context, and people across a diverse range of perspectives decide what sort of context is helpful for other users to see. We think this could be a better way of achieving our original intention of providing people with information about what they’re seeing – and one that’s less prone to bias,” he said.

He outlined the rollout:

- Once the program is up and running, Meta won’t write Community Notes or decide which ones show up. They are written and rated by contributing users.

- Just like they do on X, Community Notes will require agreement between people with a range of perspectives to help prevent biased ratings.

- He said Meta intends to be transparent about how different viewpoints inform the Notes displayed in its apps, and is working on the right way to share this information.

- People can sign up Jan. 7 (Facebook, Instagram, Threads) for the opportunity to be among the first contributors to this program as it becomes available.

“We plan to phase in Community Notes in the US first over the next couple of months, and will continue to improve it over the course of the year. As we make the transition, we will get rid of our fact-checking control, stop demoting fact checked content and, instead of overlaying full screen interstitial warnings you have to click through before you can even see the post, we will use a much less obtrusive label indicating that there is additional information for those who want to see it,” Zuckerberg said.

Zuckerberg admitted that his company has been over-enforcing its rules, limiting “legitimate political debate and censoring too much trivial content and subjecting too many people to frustrating enforcement actions.”

In December alone, the company removed millions of pieces of content every day, he said. He believes 10% to 20% of those removals may have been mistakes.

“While these actions account for less than 1% of content produced every day, we think one to two out of every 10 of these actions may have been mistakes (i.e., the content may not have actually violated our policies). This does not account for actions we take to tackle large-scale adversarial spam attacks. We plan to expand our transparency reporting to share numbers on our mistakes on a regular basis so that people can track our progress. As part of that we’ll also include more details on the mistakes we make when enforcing our spam policies,” he said.

“We want to undo the mission creep that has made our rules too restrictive and too prone to over-enforcement. We’re getting rid of a number of restrictions on topics like immigration, gender identity and gender that are the subject of frequent political discourse and debate. It’s not right that things can be said on TV or the floor of Congress, but not on our platforms. These policy changes may take a few weeks to be fully implemented,” Zuckerberg said.

“We’re also going to change how we enforce our policies to reduce the kind of mistakes that account for the vast majority of the censorship on our platforms. Up until now, we have been using automated systems to scan for all policy violations, but this has resulted in too many mistakes and too much content being censored that shouldn’t have been. So, we’re going to continue to focus these systems on tackling illegal and high-severity violations, like terrorism, child sexual exploitation, drugs, fraud and scams. For less severe policy violations, we’re going to rely on someone reporting an issue before we take any action. We also demote too much content that our systems predict might violate our standards. We are in the process of getting rid of most of these demotions and requiring greater confidence that the content violates for the rest. And we’re going to tune our systems to require a much higher degree of confidence before a piece of content is taken down. As part of these changes, we will be moving the trust and safety teams that write our content policies and review content out of California to Texas and other US locations,” he said.

People are often given the chance to appeal the enforcement decisions, but the process can be frustratingly slow and doesn’t always get to the right outcome, he admitted.

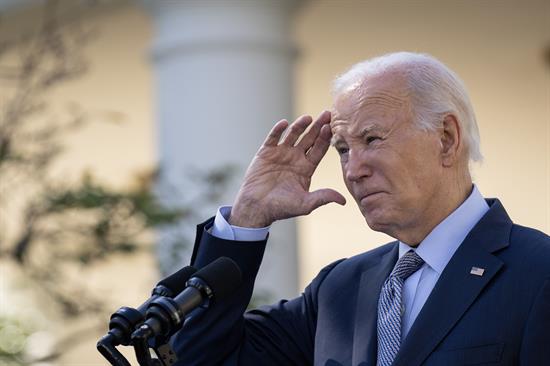

As for political content, Meta has, since Biden was sworn into office in 2021, reduced the amount of content people can see about elections, politics or social issues. Zuckerberg said that is what users told the company they wanted.

“But this was a pretty blunt approach. We are going to start phasing this back into Facebook, Instagram and Threads with a more personalized approach so that people who want to see more political content in their feeds can,” Zuckerberg said.

“We’re continually testing how we deliver personalized experiences and have recently conducted testing around civic content. As a result, we’re going to start treating civic content from people and Pages you follow on Facebook more like any other content in your feed, and we will start ranking and showing you that content based on explicit signals (for example, liking a piece of content) and implicit signals (like viewing posts) that help us predict what’s meaningful to people. We are also going to recommend more political content based on these personalized signals and are expanding the options people have to control how much of this content they see,” he said.